Structure from Motion

For my independent study project, I implemented a Structure from Motion (SfM) pipeline using an RGBD camera. The end goal was a dense 3D reconstruction of the environment. The SfM (or Visual SLAM) pipeline has two main parts:

- Odometry Estimation: estimating the camera motion from the RGBD frames.

- Loop Closing: detecting when the camera revisits a location and then optimizing the map.

The SfM method I implemented produces a sparse point cloud of map points. To get a dense point cloud, I take the camera poses estimated by SfM and fuse the depth maps from every frame.
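To make the fusion step concrete, here is a minimal Python sketch of the idea. It is not the pipeline's actual code. It assumes:

- a pinhole camera with intrinsics `fx, fy, cx, cy`;
- depth maps in metres, with 0 marking missing depth;
- camera-to-world poses `(R, t)` taken from SfM.

The fusion itself is plain voxel averaging, a simple stand-in for methods such as TSDF fusion. All function names are hypothetical.

```python
from collections import defaultdict


def backproject(depth, fx, fy, cx, cy):
    """Yield camera-frame 3D points for every pixel with a valid (non-zero) depth."""
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                yield ((u - cx) * z / fx, (v - cy) * z / fy, z)


def to_world(p, R, t):
    """Apply a camera-to-world pose: p_w = R * p_c + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def fuse(frames, fx, fy, cx, cy, voxel=0.05):
    """Fuse (depth, R, t) frames into one cloud by averaging the points in each voxel."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])  # voxel key -> [sx, sy, sz, count]
    for depth, R, t in frames:
        for p in backproject(depth, fx, fy, cx, cy):
            w = to_world(p, R, t)
            key = tuple(int(c // voxel) for c in w)
            s = sums[key]
            s[0] += w[0]
            s[1] += w[1]
            s[2] += w[2]
            s[3] += 1
    return [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums.values()]
```

Points from different frames that land in the same voxel are merged into their mean. This is what turns many overlapping depth maps into a single cloud rather than a pile of duplicates.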

Odometry (Tracking) Estimation

The first step in Structure from Motion is estimating the camera motion, which makes up the Tracking (or Frontend) step. Motion is estimated in a seven-step process:

- Identify ORB features in the current frame.

- Match the ORB features with the previous frame.

- Estimate motion using PnP + RANSAC. Eliminate the outlier matches that are not consistent with the motion.

- Add new map points from the current frame.

- Project map points in the local area to the current frame.

- Match the projected local map points with the features in the current frame that are still unmatched.

- Refine the motion estimate using the new matches.
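ORB descriptors are 256-bit binary strings, so step 2 compares them with the Hamming distance. The sketch below makes a few assumptions:

- descriptors are stored as Python integers;
- matching is brute-force nearest-neighbour search, filtered by Lowe's ratio test and a one-to-one constraint;
- `ratio` and `max_dist` are illustrative guesses, not tuned values from this project.

In practice, OpenCV's `cv2.BFMatcher(cv2.NORM_HAMMING)` performs the same brute-force Hamming search much faster.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(prev, curr, ratio=0.8, max_dist=50):
    """Match each descriptor in `curr` to its nearest neighbour in `prev`.

    A match is kept only if its Hamming distance is at most `max_dist` and it
    passes Lowe's ratio test (best distance < ratio * second-best distance).
    If several current descriptors pick the same previous one, only the
    closest is kept. Returns sorted (curr_index, prev_index) pairs.
    """
    best_for_prev = {}  # prev index -> (distance, curr index)
    for i, d in enumerate(curr):
        best, second, best_j = None, None, -1
        for j, p in enumerate(prev):
            dist = hamming(d, p)
            if best is None or dist < best:
                second, best, best_j = best, dist, j
            elif second is None or dist < second:
                second = dist
        if best is None or best > max_dist:
            continue  # no candidates, or the nearest one is too far away
        if second is not None and best >= ratio * second:
            continue  # ambiguous: the second-best candidate is almost as close
        if best_j not in best_for_prev or best < best_for_prev[best_j][0]:
            best_for_prev[best_j] = (best, i)
    return sorted((i, j) for j, (_, i) in best_for_prev.items())
```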

A diagram of the tracking process

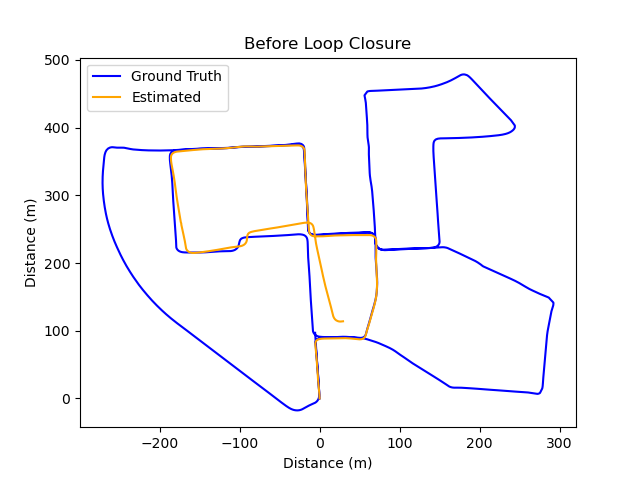

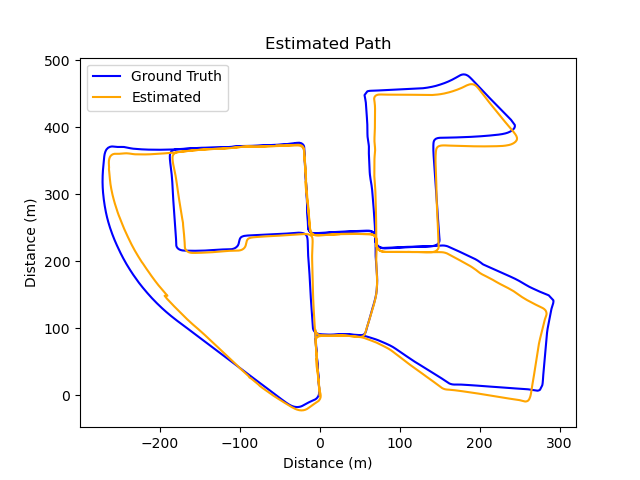

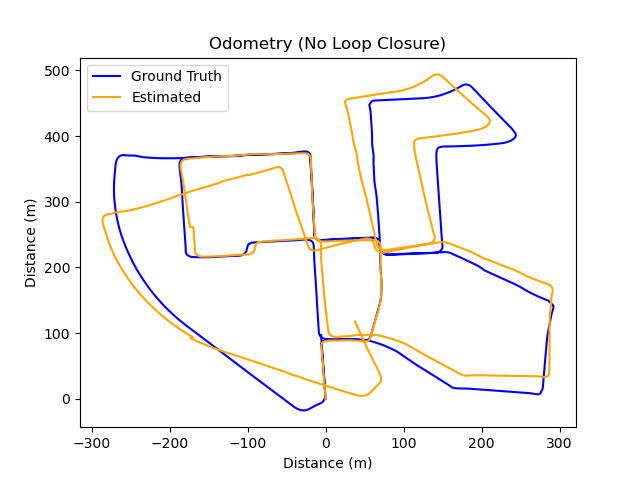
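Steps 5 and 6 restrict matching to a small window around where each local map point should appear. The sketch below is hedged and rests on these assumptions:

- poses are world-to-camera, `(R_cw, t_cw)`, with pinhole intrinsics;
- the still-unmatched keypoints arrive as a dict of pixel positions;
- the 8-pixel search radius is hypothetical.

The final descriptor comparison among the candidates is left out.

```python
def project(point_w, R_cw, t_cw, fx, fy, cx, cy):
    """Project a world point with a world-to-camera pose.

    Returns (u, v, depth), or None if the point is behind the camera.
    """
    pc = [sum(R_cw[i][j] * point_w[j] for j in range(3)) + t_cw[i] for i in range(3)]
    if pc[2] <= 0:
        return None
    return (fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy, pc[2])


def candidates_in_window(map_points, keypoints, R_cw, t_cw, intr, width, height, radius=8.0):
    """Find candidate keypoints for each local map point.

    For each map point (id -> world xyz) that projects inside the image, list
    the ids of unmatched keypoints (id -> (u, v)) within `radius` pixels.
    """
    fx, fy, cx, cy = intr
    out = {}
    for mp_id, pw in map_points.items():
        proj = project(pw, R_cw, t_cw, fx, fy, cx, cy)
        if proj is None:
            continue  # behind the camera
        u, v, _ = proj
        if not (0 <= u < width and 0 <= v < height):
            continue  # projects outside the image
        near = [k for k, (ku, kv) in keypoints.items()
                if (ku - u) ** 2 + (kv - v) ** 2 <= radius ** 2]
        if near:
            out[mp_id] = near
    return out
```

Searching only near the predicted position keeps this step cheap. It also makes false matches between visually similar but distant features much less likely.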

This tracking process gives an odometry estimate of the camera motion, much like wheel encoders do. Consequently, the estimate drifts over time, as illustrated below by a complete run on sequence 00 of the KITTI dataset.
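A toy example (not KITTI data) shows why odometry drifts. It integrates a straight 2D trajectory of 1 m steps twice: once exactly, and once with a hypothetical heading error of 0.001 rad per step. It then measures the position gap between the two.

```python
import math


def compose(pose, step):
    """Compose an SE(2) pose (x, y, theta) with a relative motion (dx, dy, dtheta).

    The motion is expressed in the pose's own frame.
    """
    x, y, th = pose
    dx, dy, dth = step
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)


def drift(n_steps, yaw_bias=0.001):
    """Return the position error after each step.

    Integrates a straight 1 m/step path with and without a small per-step
    heading error.
    """
    truth, est = (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)
    errors = []
    for _ in range(n_steps):
        truth = compose(truth, (1.0, 0.0, 0.0))
        est = compose(est, (1.0, 0.0, yaw_bias))
        errors.append(math.hypot(est[0] - truth[0], est[1] - truth[1]))
    return errors
```

Each heading error bends every later step, so the error grows much faster than linearly with distance. This is why a tiny per-frame bias eventually produces large drift on long runs.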

Loop Closing

To correct this drift and improve the accuracy of the position estimate, we can add Loop Closing, a component of the SLAM Backend. Loop Closing detects when the camera revisits a previously visited location and uses that match to correct the accumulated error.

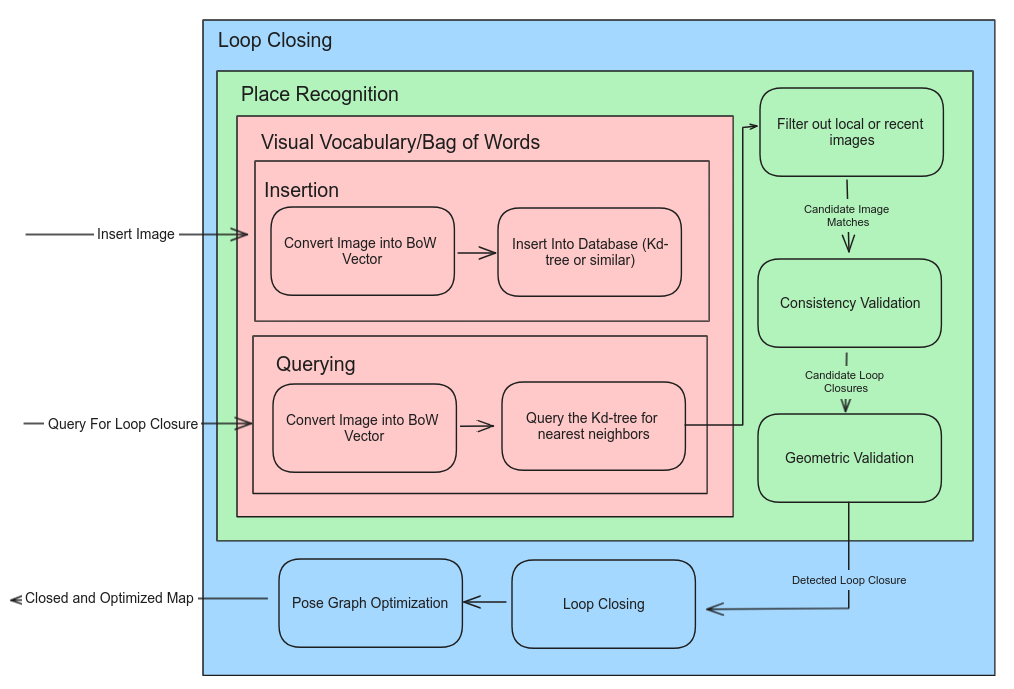

Before we can close a loop and optimize the map, we need to detect when the camera revisits a location. I built a place recognition module on top of a visual vocabulary and Bag of Words database (the DBoW2 library), which lets me quickly query the database for similar images. The query returns several candidate images, and identifying a loop from them is a three-step process:

- Remove any candidates that were seen recently. For example, the previous frame will almost always have a high similarity score.

- For the camera to have looped, the same place must be matched consistently over several consecutive frames. This is the consistency validation.

- For any consistent candidates, we then perform geometric verification. We match features between the current frame and the candidate frame, then robustly estimate the relative motion with RANSAC. If there are enough inliers, we have identified a loop.
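The first two filtering steps can be sketched as follows. This is not the DBoW2 API. The sketch assumes:

- each image is already summarised as a sparse Bag of Words vector (a dict from word id to weight);
- pairs are scored with an L1 score of the form used by DBoW2, 1 - 0.5 * || v1/|v1| - v2/|v2| ||_1;
- the thresholds (`min_gap`, `min_score`, `window`, `needed`) and the class name are hypothetical.

The consistency rule requires a nearby candidate in each of the last few queries. It is a simplified version of the group-based checks used in practice.

```python
from collections import deque


def l1_score(v1, v2):
    """Similarity in [0, 1] between two sparse BoW vectors (word id -> weight)."""
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(k, 0.0) / n1 - v2.get(k, 0.0) / n2) for k in words)
    return 1.0 - 0.5 * diff


class LoopCandidateFilter:
    """Steps 1 and 2: drop recent frames, then require consistency over queries."""

    def __init__(self, min_gap=30, min_score=0.3, window=3, needed=3):
        self.database = []          # BoW vectors of past frames, indexed by frame
        self.min_gap = min_gap      # frames this recent are never loop candidates
        self.min_score = min_score  # minimum similarity to count as a candidate
        self.window = window        # max index distance to count as "the same place"
        self.needed = needed        # number of consecutive queries that must agree
        self.history = deque(maxlen=needed - 1)  # candidate lists of earlier queries

    def query(self, bow):
        """Add the frame described by `bow`; return its consistent candidate indices."""
        current = len(self.database)
        candidates = [i for i, past in enumerate(self.database)
                      if current - i > self.min_gap and l1_score(bow, past) >= self.min_score]
        consistent = [c for c in candidates
                      if len(self.history) == self.needed - 1
                      and all(any(abs(c - p) <= self.window for p in prev)
                              for prev in self.history)]
        self.history.append(candidates)
        self.database.append(bow)
        return consistent
```

A single spurious high score is therefore not enough: a revisit is reported only once several consecutive frames agree on roughly the same past location.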

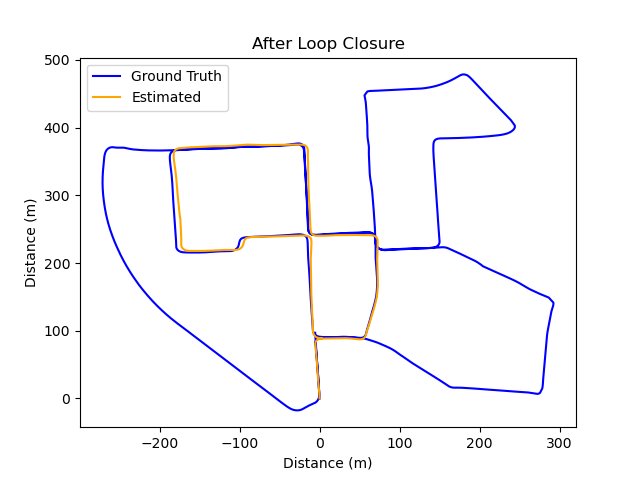
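For the geometric verification step, the real pipeline estimates a full 6-DoF camera motion. To keep this sketch short and dependency-free, it swaps in a simpler model: a 2D rigid transform (rotation plus translation) fitted from two matched points per RANSAC iteration. The inlier tolerance, iteration count, and `min_inliers` threshold are illustrative assumptions.

```python
import math
import random


def rigid_from_two(p1, p2, q1, q2):
    """Rotation angle and translation of the 2D rigid motion taking p1->q1, p2->q2."""
    a = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    b = math.atan2(q2[1] - q1[1], q2[0] - q1[0])
    th = b - a
    c, s = math.cos(th), math.sin(th)
    tx = q1[0] - (c * p1[0] - s * p1[1])
    ty = q1[1] - (s * p1[0] + c * p1[1])
    return th, tx, ty


def transform_point(model, p):
    """Apply a (theta, tx, ty) rigid transform to a 2D point."""
    th, tx, ty = model
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def ransac_verify(src, dst, iters=200, tol=0.05, min_inliers=10, seed=0):
    """Return (is_loop, inlier_indices) for putative matches src[i] <-> dst[i]."""
    rng = random.Random(seed)
    best = []
    if len(src) < 2:
        return False, best
    for _ in range(iters):
        i, j = rng.sample(range(len(src)), 2)
        if math.dist(src[i], src[j]) < 1e-9:
            continue  # degenerate sample: the two points coincide
        model = rigid_from_two(src[i], src[j], dst[i], dst[j])
        inliers = [k for k in range(len(src))
                   if math.dist(transform_point(model, src[k]), dst[k]) < tol]
        if len(inliers) > len(best):
            best = inliers
    return len(best) >= min_inliers, best
```

A production version would re-fit the model on all inliers before accepting the loop. The decision rule stays the same: a loop is accepted only if enough matches agree on one motion.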

After a loop is identified, it is closed by fusing the duplicated map points and features in the current frame with those in the looped frame. This adds an edge to the pose graph. The graph is then optimized with Pose Graph Optimization, for which I used the Ceres Solver. The optimization corrects the drift, as shown in the before and after images below.
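Ceres is a C++ library, and the sketch below does not use it. It is a toy, linear 1D pose graph meant only to show how a loop closure edge redistributes accumulated drift:

- each edge `(i, j, z, w)` says that `x_j - x_i` should equal `z`, with weight `w`;
- pose 0 is fixed at 0;
- the weighted least-squares problem is solved through its normal equations.

Real pose graphs use full 3D poses and need an iterative nonlinear solver such as Ceres.

```python
def optimize_pose_graph(n, edges):
    """Least-squares 1D positions x_0..x_{n-1} from weighted relative constraints.

    edges: list of (i, j, z, w) meaning x_j - x_i should equal z with weight w.
    x_0 is held fixed at 0 to remove the gauge freedom; every other pose must be
    connected to pose 0 through the edges, otherwise the system is singular.
    """
    m = n - 1  # unknowns are x_1..x_{n-1}
    H = [[0.0] * m for _ in range(m)]
    b = [0.0] * m
    for i, j, z, w in edges:
        # Residual r = x_j - x_i - z has Jacobian -1 w.r.t. x_i and +1 w.r.t. x_j.
        for a, sa in ((i, -1.0), (j, 1.0)):
            if a == 0:
                continue  # x_0 is fixed, so it has no row in H
            b[a - 1] += w * sa * z
            for c, sc in ((i, -1.0), (j, 1.0)):
                if c != 0:
                    H[a - 1][c - 1] += w * sa * sc
    # Solve H x = b by Gaussian elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(H[r][col]))
        H[col], H[piv] = H[piv], H[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            f = H[r][col] / H[col][col]
            for c in range(col, m):
                H[r][c] -= f * H[col][c]
            b[r] -= f * b[col]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (b[r] - sum(H[r][c] * x[c] for c in range(r + 1, m))) / H[r][r]
    return [0.0] + x
```

Consider odometry steps of +1.0, +1.0, -0.9, -0.9. Integrating them leaves the last pose 0.2 away from where the camera started. Adding a strongly weighted loop edge `(0, 4, 0.0, 100.0)` pulls it back close to 0 by shifting each odometry step by roughly -0.05, so the error is spread evenly along the loop.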